Watching the AI race is really something, folks. Meta dropped its latest model this week and it's a doozy! If its benchmarks are anything to go by, the company seems hell-bent on blowing its competition out of the water, and with open-source technology, no less. If anything, it's a huge win for developers worldwide.

On the other hand, the world's richest man and compulsive shitposter Elon Musk has his AI startup, xAI, cooking up the next iterations of Grok. The company has slated Grok 2 for release sometime in August, with Grok 3 planned for December this year. That's a helluva turnaround time.

Here is some AI news from the past week that's worth a read.

Meta's Latest Llama Model

Back when Meta first released its Llama series of AI models, the company said that having an open source platform leads to “better, safer products, faster innovation, and a healthier overall market”. They weren't wrong. Meta's Llama models have been narrowing the gap with their competitors.

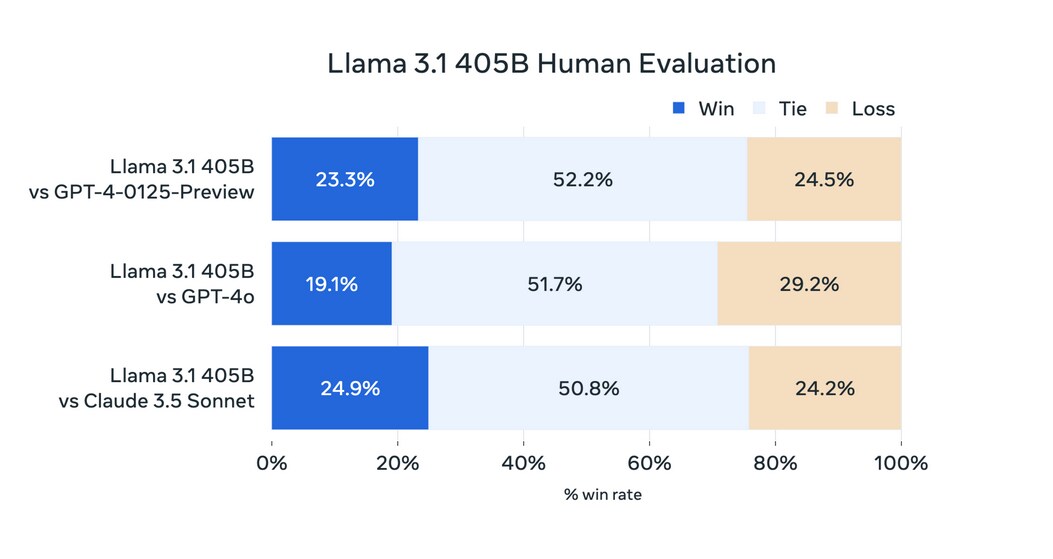

The excitement over Meta's new model is understandable, given that its benchmarks were leaked just a day ahead of its release, with people pointing to how the new model was able to beat OpenAI's GPT-4 and 4o as well as Anthropic's Claude 3.5 Sonnet in key AI benchmarks.

Officially, Meta's latest model, Llama 3.1 405B, supports eight languages, has a context length of 128,000 tokens and was trained on over 15 trillion tokens sourced from public data sets. Meta also used synthetic data as part of the model's supervised fine-tuning process.

Llama 3.1 405B can go toe-to-toe with some of the world's best AI models. (Source: Meta)

The company has even introduced the Llama Stack API for integration, supported by an ecosystem of several companies, including AWS, Nvidia, Databricks, Groq, Dell, Azure, and Google Cloud.

But the excitement around Meta's release isn't just about beating benchmarks and companies more focused on AI. The release also holds significance for the open-source AI community worldwide.

Why? It's the first time an open-source model has beaten closed-source ones, and ones with so much money and backing behind them, at that. Of course, one could (and should) argue that Meta has deeper pockets, given its size compared to companies like Anthropic, OpenAI or Mistral.

For a company as large as Meta, it would arguably have been easier, and probably more cost-effective, to keep the model closed, at least from a technical standpoint. But Meta founder Mark Zuckerberg detailed his reasoning for the open-source approach in a letter released alongside the announcement of Llama 3.1 405B.

One reason was to ensure Llama can develop into a “full ecosystem of tools, efficiency improvements, silicon optimizations, and other integrations”. The second is that he doesn't think open-sourcing will cost Meta much of a “competitive” edge, given the pace of development. Finally, and this one's the big one folks, good ol' Zuckerberg says “selling access to AI models isn't our business model”. And you know what? That's true.

As a company that runs some of the world's largest social media platforms, Meta rakes in the cash mostly through one thing: advertising. It gives advertisers access to some of the largest user bases in the world. That's a motherlode of data and people, all of whom are willing to spend money on things they like, want or need.

Honestly, why would Meta even bother to make people pay for access to AI models? Sure, they'd make a lot of money, but by making them open, they're crowd-sourcing innovation, which will likely make them more than enough money in the long run.

Elon Musk And xAI

Love him or hate him, Elon Musk sure knows how to throw money at a problem. Just look at xAI right now. The South African-born billionaire has been pouring money into his AI startup, which is building Grok.

It shouldn't come as any surprise, though. The man has a lot of money to burn, and plenty of people who want to burn it with him and are willing to go all the way. The company closed a $6 billion Series B funding round in May. That's not exactly chump change.

Earlier this week, the world's richest man held a poll on X asking whether Tesla should invest $5 billion in xAI. Poll results showed that 67.9% believed it should. Granted, a poll on X is likely meaningless, but it goes to show that, whether people are trolling or not, there is significant support for his AI startup.

But what about Tesla's shareholders and board members? The EV maker's shares haven't been doing too well over the past couple of weeks. They declined earlier this week when the company announced its Q2 results, and overall, the stock is down just over 11% year-to-date.

Tesla shareholders must be feeling pretty sidelined after a disappointing Q2.

Musk throwing his own shenanigans into the mix can't be easy for shareholders to come to terms with. But one thing remains true: the world's richest shitposter is building a powerful AI, and if Grok 3 does show up in December this year, xAI will have cemented itself as a force to be reckoned with in the industry.

In a post on X (formerly Twitter) last Sunday, the billionaire said his AI startup has launched the “Memphis Supercluster”. The supercomputer is equipped with 100,000 Nvidia H100 GPUs and is being touted by Musk as the “most powerful AI training cluster in the world”. The billionaire says he plans to release what he calls “the world's most powerful AI by every metric by December this year.”

Nice work by @xAI team, @X team, @Nvidia & supporting companies getting Memphis Supercluster training started at ~4:20am local time.

With 100k liquid-cooled H100s on a single RDMA fabric, it's the most powerful AI training cluster in the world!

He's referring to Grok 3, the model that he and his team at xAI are building. In a separate interview with Canadian psychologist Jordan Peterson, the world's richest man said that the next iteration of his AI, Grok 2, will be released in August.

In the interview, Musk said that the latest iteration of Grok had been trained on 15,000 H100 GPUs. With the launch of the Memphis Supercluster, xAI will be training Grok 3 on nearly seven times as many GPUs.

"Grok 3.0 will be the most powerful A.I. in the world and we're hoping to release it by December".

- Elon Musk

Beyond Tomorrow is a weekly newsletter published every Saturday to give you a roundup of everything AI in the last week.

Essential Business Intelligence, Continuous LIVE TV, Sharp Market Insights, Practical Personal Finance Advice and Latest Stories — On NDTV Profit.